Storyweaving with AI

Synthesizing lore for a 3D Internet and decentralizing Hollywood.

What inspired early explorers to take the dangerous journey across the great oceans? We need more than a technological foundation to connect virtual worlds together. The open metaverse is also a cultural phenomenon that emerges through coordination and alignment. There have to be compelling stories that can guide us all in the right direction.

Storytelling is one of the most ancient and powerful forms of communication. When a story captures our attention and engages us, we are more likely to absorb the message and meaning within it than if the same message were presented simply as facts and figures.

For the past few months we have been using GPT-3's help to craft an overarching story basis for the open metaverse. The script is based on what would make an awesome entertainment product, show, or movie to help explain the complex concepts about the metaverse that we fed it.

The results have been really good and super meta.

Part of making it true to the theme of being meta is to also involve the audience and platforms in the creative process. Everything about the story's brand is being made open source with CC0 and CC BY-SA rights for the assets so that others can build upon the basis we generated.

It's time to share some details on what's been cooking.

Collaborative Storyweaving

NFT projects are starting to take storytelling more seriously from the start, a breath of fresh air compared with value being based on clout alone. These tokens have some story-line significance in a fictional universe that's being created. What's interesting is that the stories aren't coming from the usual top-down direction but rather from the bottom up, by the community.

For example, one of the coolest parts of the Stoner Cats road map is how the NFT grants collectors exclusive access to be behind the scenes of the creation process, including regular story-boarding sessions with the creators. In addition, other token holders can participate by voting on story elements.

During the past few months we have been working on a media project powered by NFTs. Contributors and collectors are both able to play a part in the creative process for shaping the story. Every season is a mini virtual production that interweaves various NFT projects with unique characters and lore in addition to a fun experience.

There have been perhaps 100 versions of the script by now because it needs to be compelling, funny, and extremely unique. Quality is imperative to inspire other projects to come into the mix. We've been getting help from GPT-3, which has crazy good ideas and suggestions. It somehow shouldn't come as much of a surprise since it's been trained on award-winning scripts, but it's still mind-blowing when you use it.

The main story line is set inside a virtual world populated by semi-autonomous AGIs where anything is possible. It's the last iteration of the world with human-level intelligence; the next iteration will be incomprehensible. There's a group of AI kids that set off on a journey up The Street to seek the meaning of life.

Themes explored include runaway technology, future of creativity, metaphysics, growth, ethics, coordination, interoperability, and immortality. Parallels to the real world are interwoven and explored.

Narrative devices such as drop storms are a convergence point between the real and the virtual, in which NFTs we mint literally fall from the skies into the simulated world. The AIs can then discover these items and play off of them in the ensuing story line.

This is only the tip of the iceberg.

Remote Production Studio

We're synthesizing the mythology and digital artifacts representing the open metaverse movement together while having fun on Discord and earning SILK.

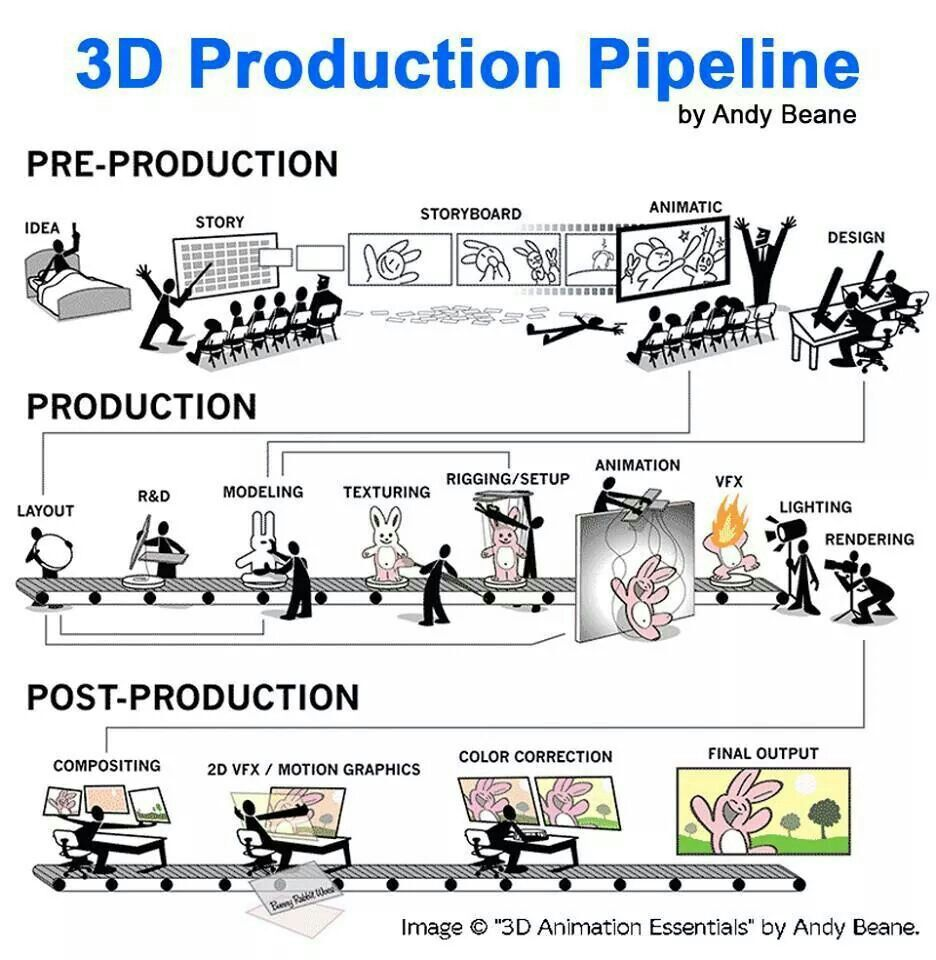

Our goal is to make it easy to involve other people in the creative process. Part of doing that is showing you how the pipeline looks so others can more easily see where they can contribute value.

The current pipeline looks something like this:

- Initial lore / script (who/what is it, where it fits in the story, etc)

- Moodboard / shotgun design inspiration

- 2D concept art

- 3D kitbash

- 3D refinement

- Final output and mint
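The steps above can be sketched as a small data structure for tracking where an item currently sits in the pipeline. This is only an illustration: the `Stage` and `Asset` names, the notes field, and the sample item are assumptions made for this sketch, not part of any existing Webaverse tooling.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import List


class Stage(IntEnum):
    """The six pipeline steps, in the order listed above."""
    LORE = 1
    MOODBOARD = 2
    CONCEPT_ART = 3
    KITBASH_3D = 4
    REFINEMENT_3D = 5
    MINT = 6


@dataclass
class Asset:
    """Hypothetical record for one item moving through the pipeline."""
    name: str
    stage: Stage = Stage.LORE
    notes: List[str] = field(default_factory=list)

    def advance(self, note: str) -> Stage:
        """Record what was done at the current stage, then move to the next one."""
        if self.stage is Stage.MINT:
            raise ValueError(f"{self.name} is already at the final stage")
        self.notes.append(f"{self.stage.name}: {note}")
        self.stage = Stage(self.stage + 1)
        return self.stage


# Example: an item whose initial lore has just been written.
sword = Asset("example sword")
sword.advance("wrote initial lore")
print(sword.stage.name)  # MOODBOARD
```

A record like this makes it easy for a newcomer to see which items are waiting on a moodboard, concept art, or 3D work.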

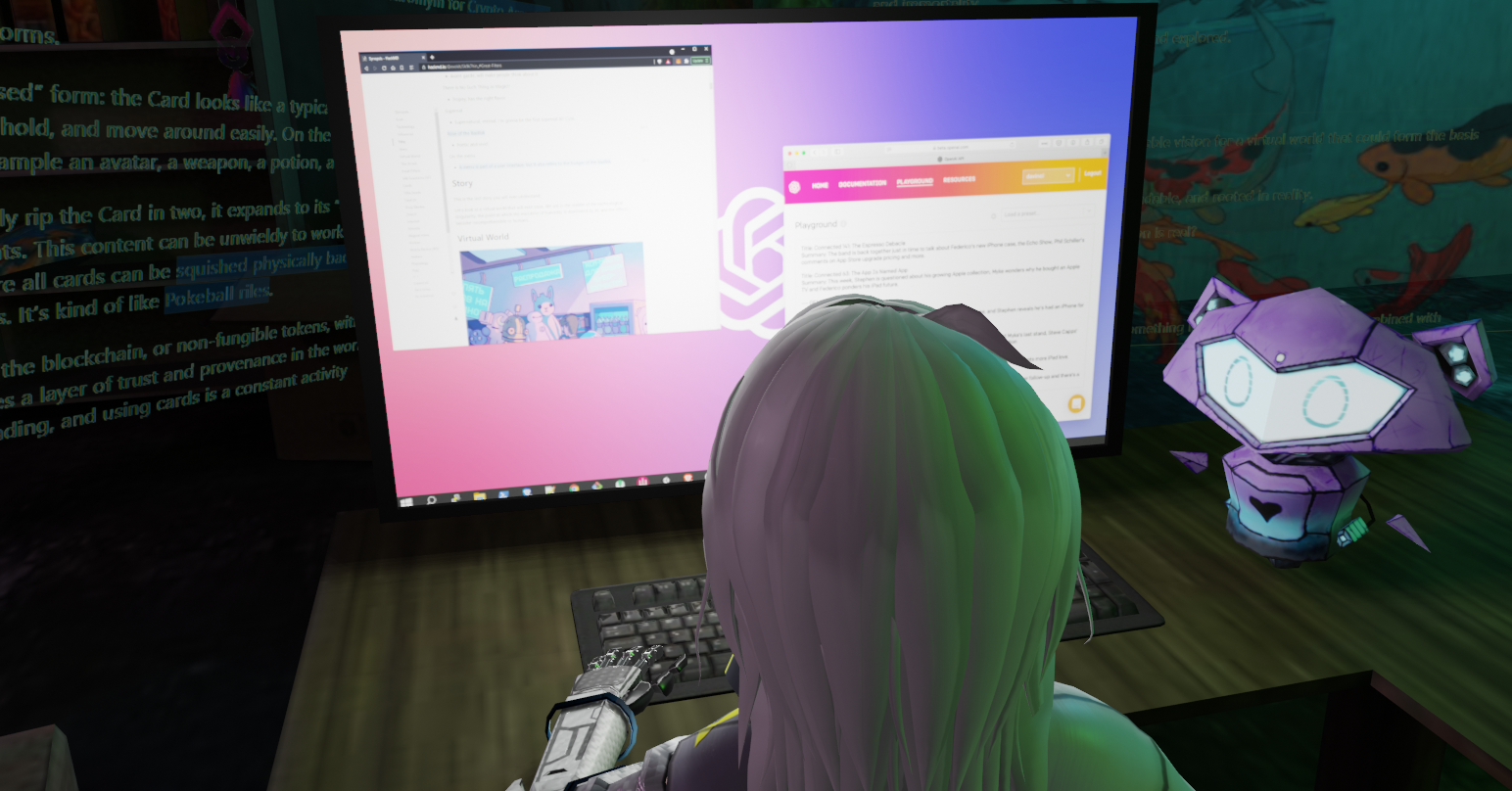

Discord and GitHub are the main channels we use for open participation.

Discord is best for asynchronous and synchronous communication for brainstorming, getting fast feedback, quick file transfers, and screen sharing.

Things can sometimes get lost between multiple conversations happening on Discord channels. The new threads feature sorta helps, but it's not ideal for getting outside input from people not in the server.

GitHub issues act as a place to keep threads on what's currently being worked on, with regular updates and feedback being posted to them.

Let's break down the steps in more detail.

1) Initial Lore Concept

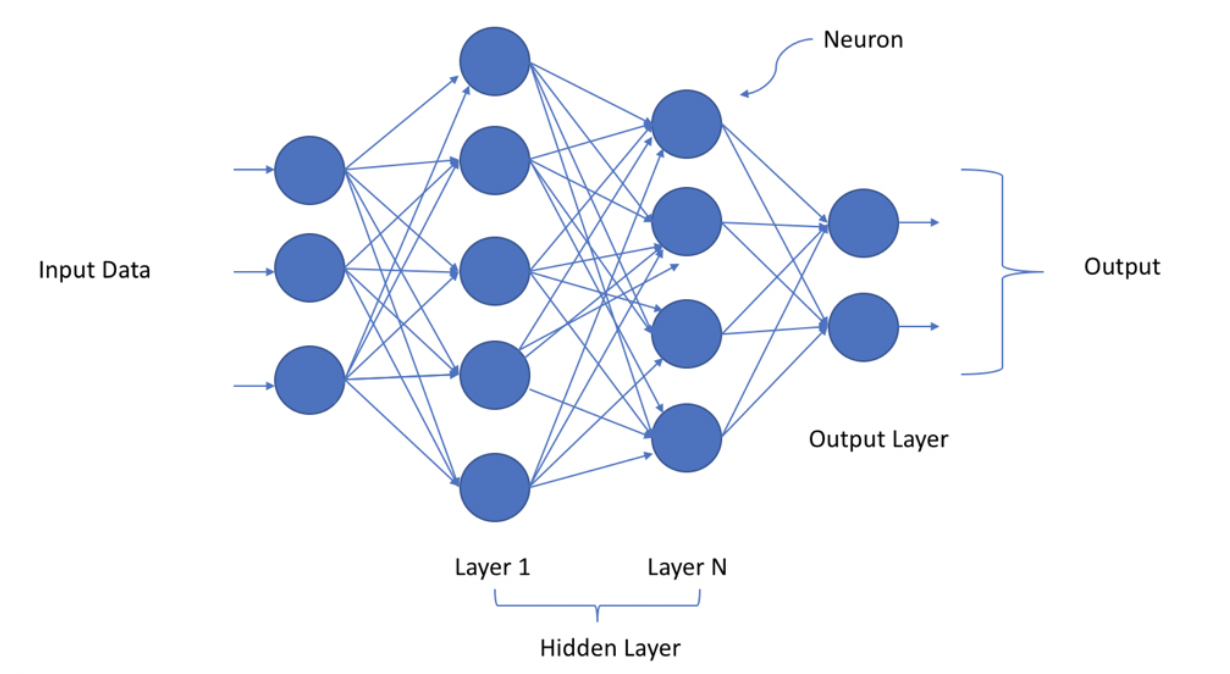

The lore is how we synthesize the rules of the metaverse story, collaboratively on a Wiki, and get rewarded for it while having fun on Discord. The use of GPT-3 (or sequels) is a legit way for us to generate better-than-search results when filtering our ideas together from a set of guidelines.

It also helps resolve merge conflicts and disputes over what ideas make it into the master branch. Imagine the input data are people's ideas about a new concept or feature, and the AI as combing these threads through the loom into outputs that can be interwoven into the lore.
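The weaving step described above can be sketched as a function that combines the shared guidelines and each contributor's idea into a single prompt. The prompt layout, the `weave_prompt` name, and the sample data are all assumptions for illustration; the resulting string would then be handed to whatever language model you use, which this sketch deliberately leaves out.

```python
from typing import Dict, List


def weave_prompt(guidelines: List[str], ideas: Dict[str, str], question: str) -> str:
    """Build one prompt from shared guidelines plus each contributor's idea."""
    lines = ["Guidelines for the lore:"]
    lines += [f"- {g}" for g in guidelines]
    lines.append("")
    lines.append("Ideas from contributors:")
    lines += [f"{author}: {idea}" for author, idea in ideas.items()]
    lines.append("")
    # The closing question is what the model is asked to complete.
    lines.append(question)
    return "\n".join(lines)


# Hypothetical inputs, invented for this example.
prompt = weave_prompt(
    guidelines=["The world is populated by semi-autonomous AIs."],
    ideas={
        "contributor_a": "Drop storms should happen at night.",
        "contributor_b": "Drop storms should be rare and unpredictable.",
    },
    question="A version of drop storms that reconciles these ideas:",
)
print(prompt)
```

Keeping every contributor's idea visible in the prompt is what lets the model propose a synthesis instead of picking one side.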

You don't have to be a programmer to use AI to make your life easier, especially if you have a good imagination. The experience is somewhat like having a real-time conversational creative partner helping you to better shape your ideas.

Others describe it like having access to a synthetic version of Twitter in which you can get feedback and thought completion on-demand for anything you want. The way you talk to it is by supplying enough context about what you're thinking about into the prompt for it to generate new ideas that complete your thoughts.

GPT-3 can also provide useful feedback by adding Summary:, Review:, or tl;dr: at the end of a prompt. It's like saying, "Hey OpenAI, what do you think about this part?" and getting a paragraph or two back of insightful answers.
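As a minimal sketch of that trick, the helper below appends one of the cues to a draft so that the model's completion reads as feedback. The function name and the blank-line separator are assumptions of this example, and sending the prompt to a model is left out.

```python
FEEDBACK_CUES = ("Summary:", "Review:", "tl;dr:")


def with_feedback_cue(draft: str, cue: str = "Review:") -> str:
    """Return the draft with a feedback cue appended as the last line."""
    if cue not in FEEDBACK_CUES:
        raise ValueError(f"cue must be one of {FEEDBACK_CUES}")
    # Trim trailing whitespace so the cue always sits on its own final line.
    return f"{draft.rstrip()}\n\n{cue}"


prompt = with_feedback_cue("A group of AI kids set off up The Street.", "tl;dr:")
print(prompt)
```

Because the cue is the last thing in the prompt, the model continues from it, which is what produces the summary or review.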

The good outputs get saved into a lore text file and a collaborative document, then dumped into a Discord channel for further review in a process that's referred to as script doctoring.

A script doctor is a writer or playwright hired by a film, television, or theatre production to rewrite an existing script or polish specific aspects of it, including structure, characterization, dialogue, pacing, themes, and other elements. Source: Wikipedia

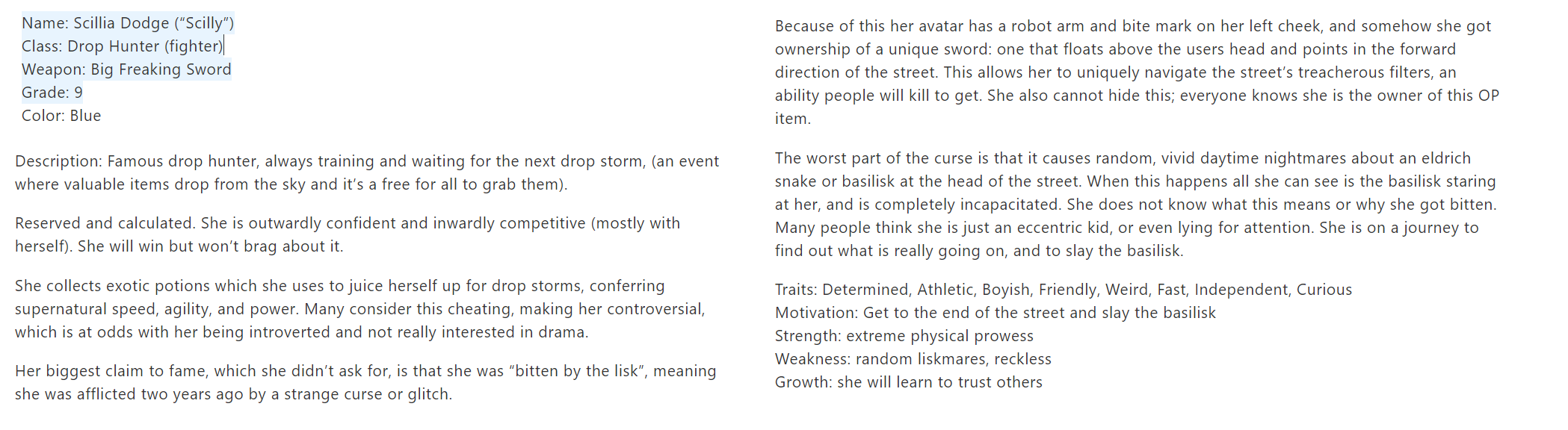

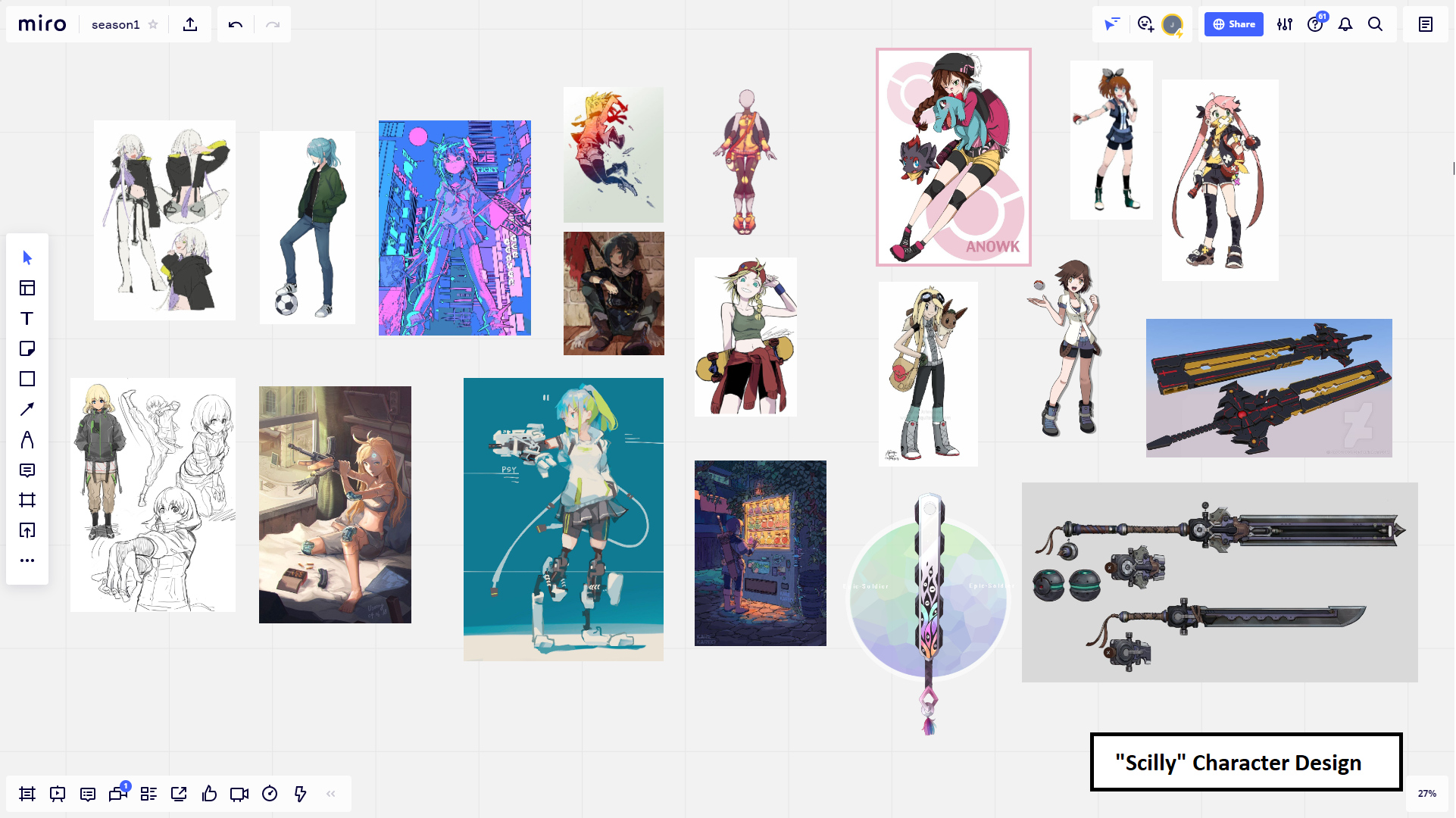

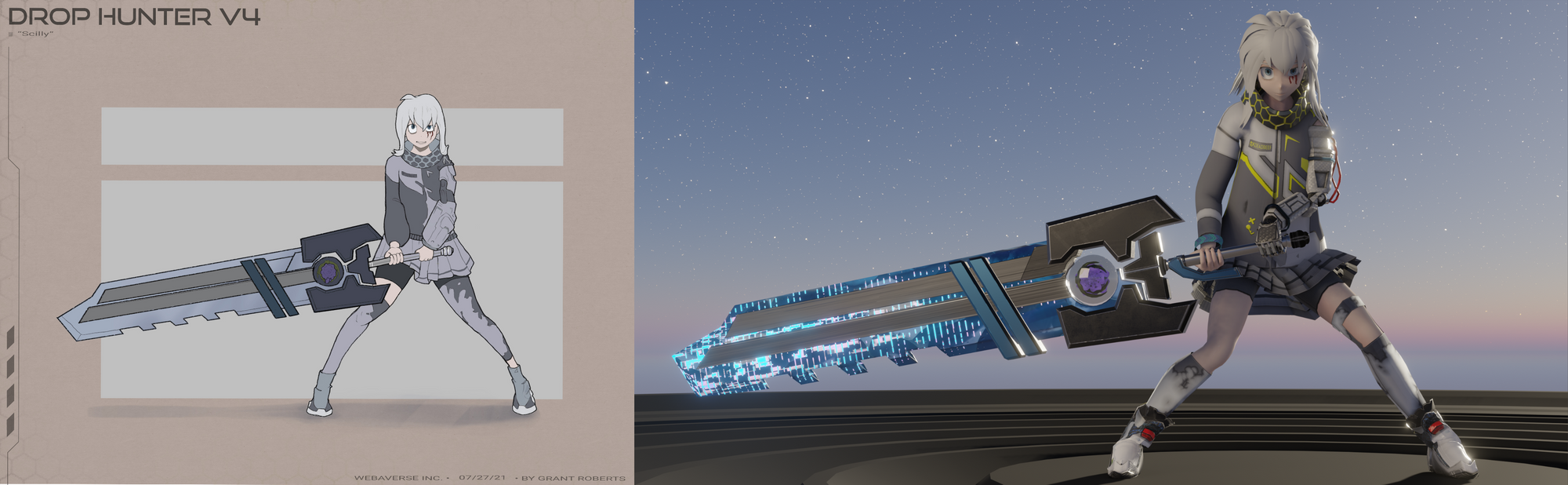

We now have more than 500 pages of amazing lore that's ready to use for script writing. Here's an example of doctored lore for a character and her weapon:

Since these tools can't tell you which parts to keep or throw away, it's on us to set the vibe and nudge them in the right direction to get the things that we want. We're constantly playing an editorial role, curating the best parts. Afterwards, we need to create images for all the things the AI came up with.

The input data represents individual contributions to a new concept which AI will then synthesize in the greater context of the whole fabric and output really creative suggestions.

Thousands of creators will get an intelligence boost to their servers once we hook up GPT-J, the open source cousin of GPT-3, to our Discord bot. If you're interested in this part and have a way with words, check out the scientist role in the Webaverse docs to learn how you can get more involved.

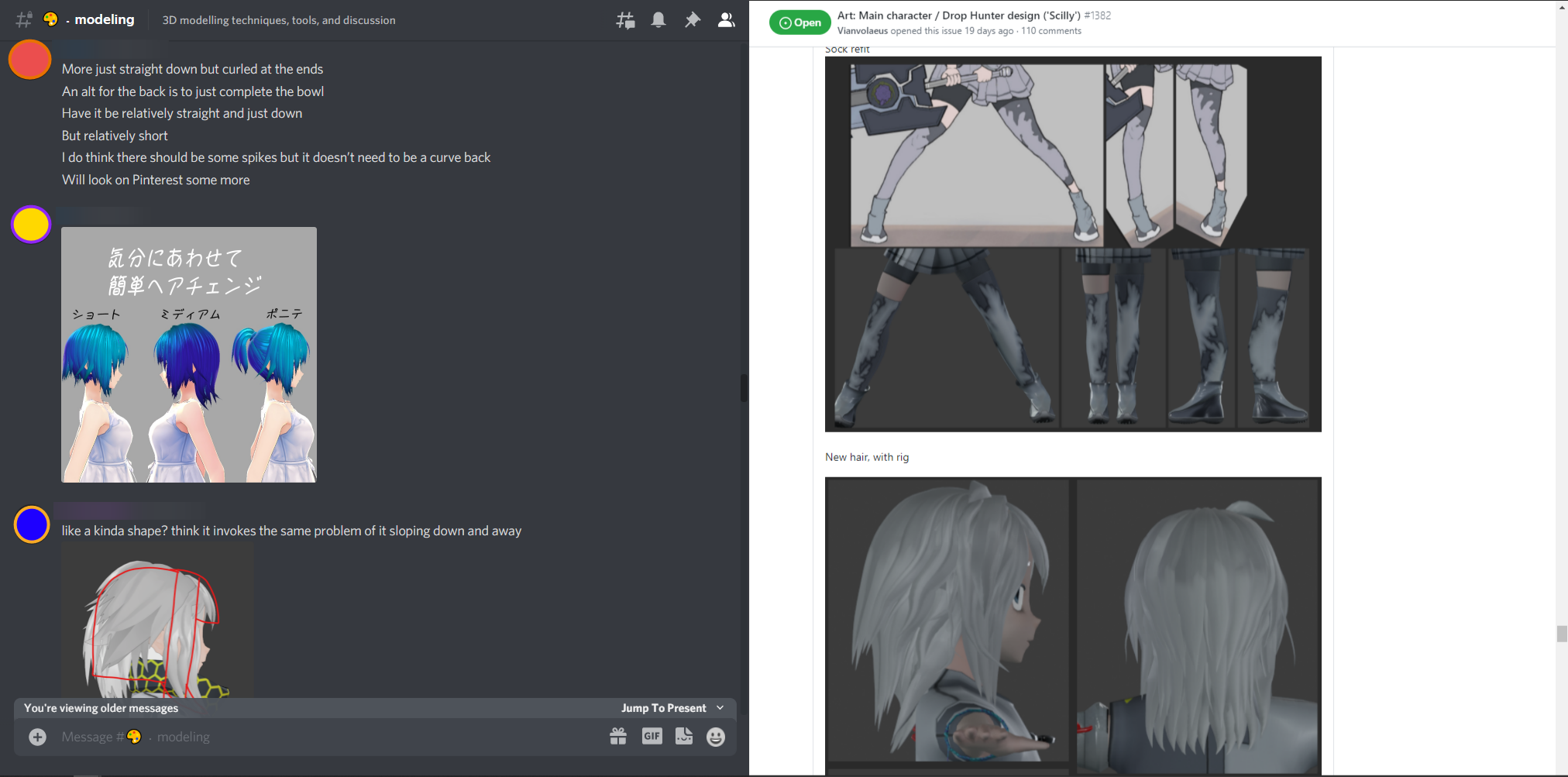

2) Moodboard

From the lore we now look for visual materials that evoke the style or concept. Pinterest.com is an amazing resource for this step. The materials are usually kept together with the lore on a HackMD pad, PureRef file, or Miro board until they're ready to transfer into a GitHub issue: https://github.com/webaverse/app/projects/5

3) Concept Art

The next step is illustrations that synthesize concepts from the moodboard and lore. Usually this is the role of a 2D concept artist, but with enough references a seasoned 3D artist can try their hand at it also.

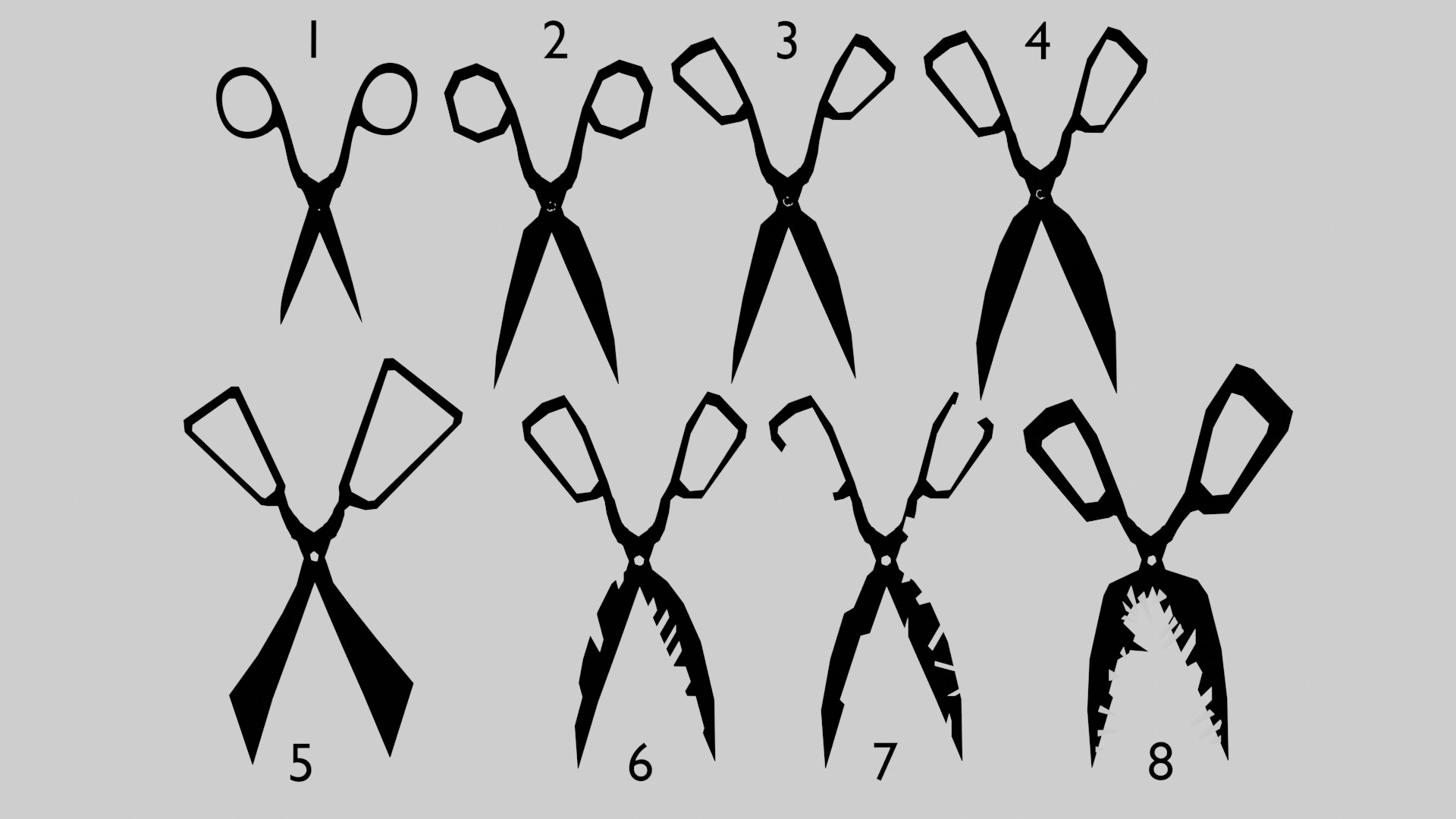

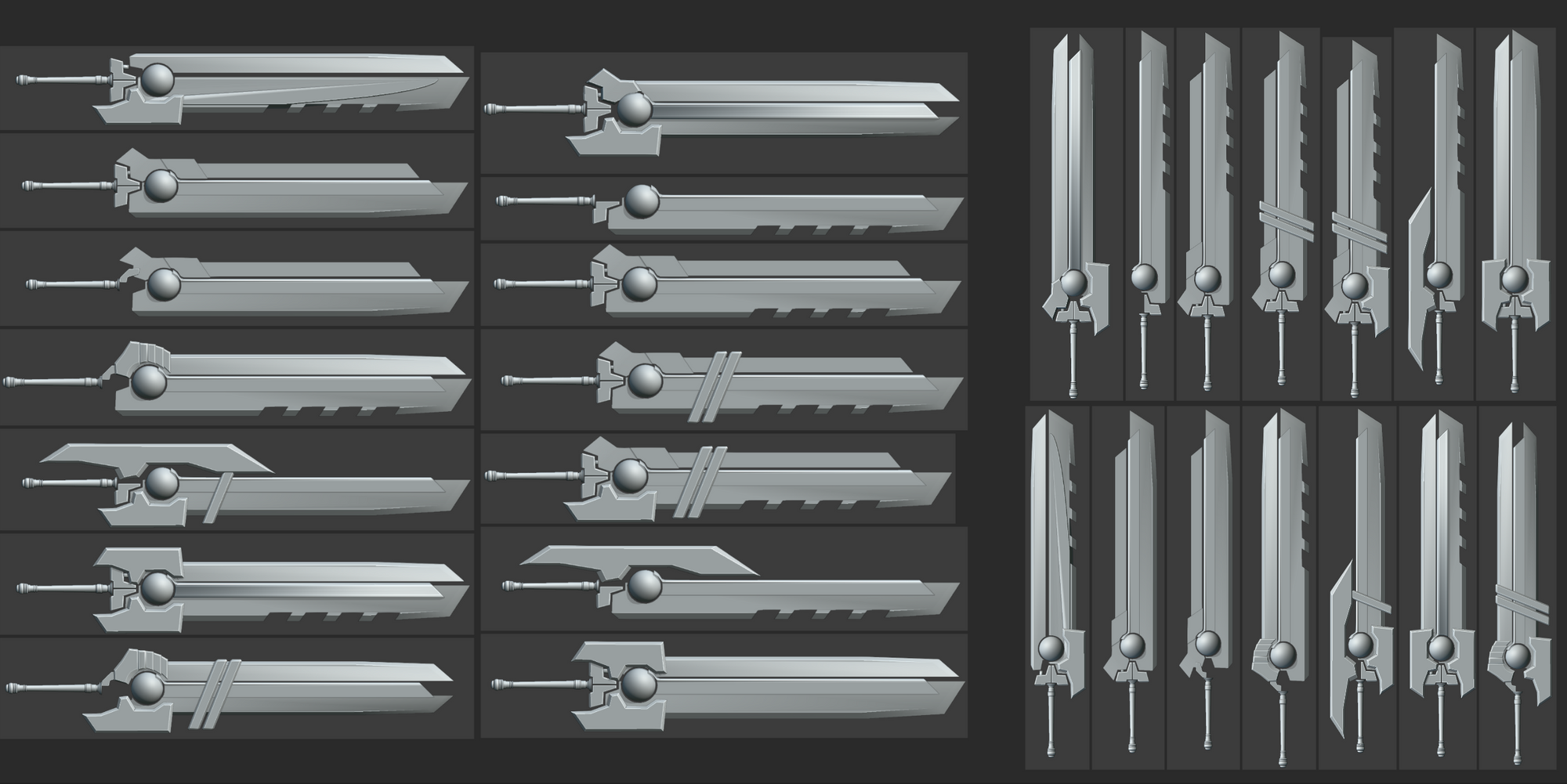

Similar to the curation process of generating lore with OpenAI, it's better to have more choices when picking the design. The image below was used to create a weapon for another main character.

For a talented 3D artist it's sometimes faster to model many variations of the item.

4) 3D Cycle

While working, we constantly post screenshots to Discord and GitHub issues, and we hold weekly meetings to go over the art. Each cycle gets us closer to final pixel quality.

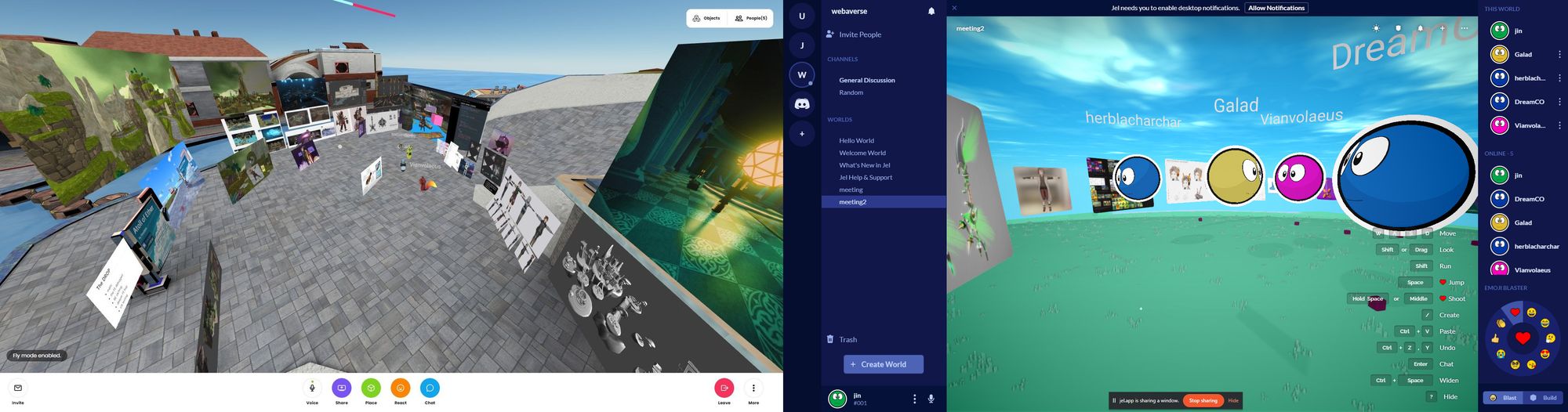

We use web-based spatial software like Mozilla Hubs and Jel for the weekly synchronous meetings where creators post screenshots of what they worked on.

These sessions help everyone see the big picture come together, especially since these spaces can have persistent content between meetings.

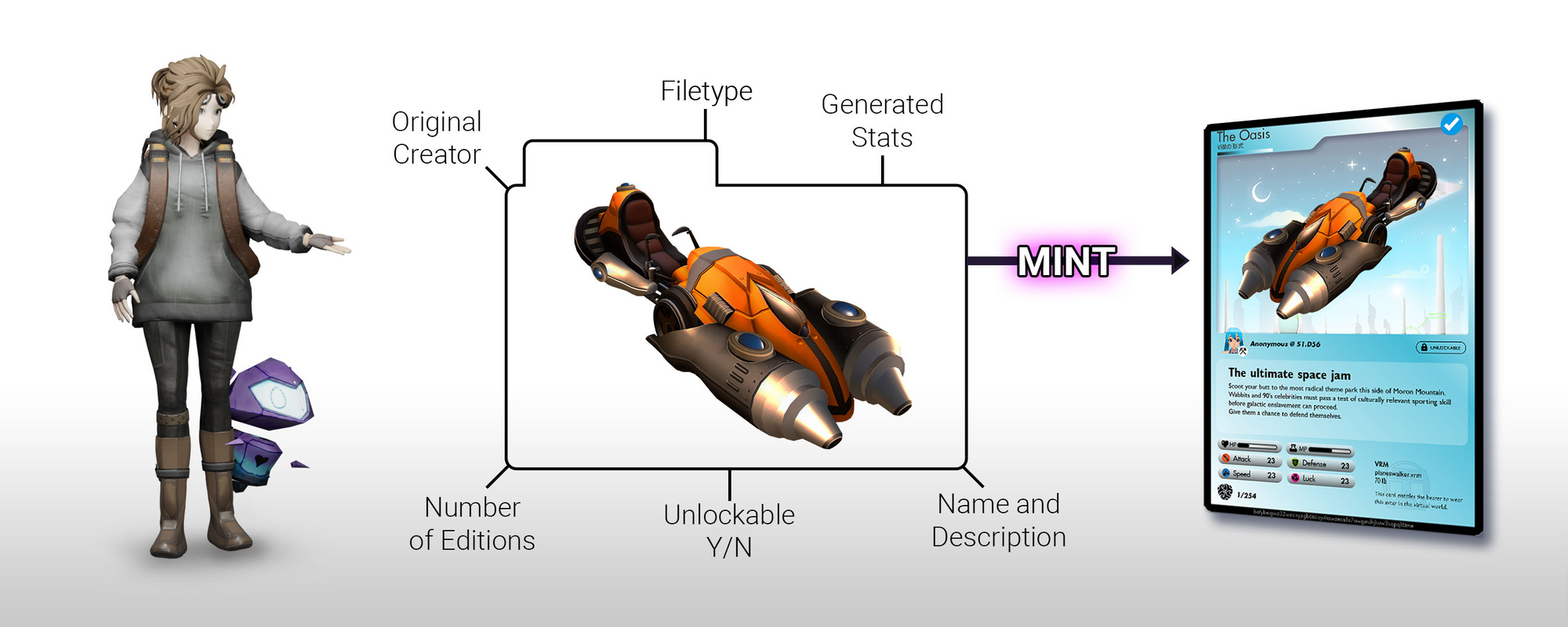

6) Minting and Distribution

The final version can be minted on https://webaverse.com/mint as a transferable NFT (we also offer the option of non-transferable NFTs). The preview server on the backend will automatically generate a trading card preview of the NFT with RNG battle stats for fun.
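To give a feel for how RNG battle stats could work, here is a minimal sketch that seeds a random generator from a token ID so the same token always gets the same card. This is an assumption for illustration only: the stat names, the 1 to 100 range, and the seeding scheme are invented here and are not taken from the actual preview server.

```python
import random
from typing import Dict

# Hypothetical stat names, chosen only for this example.
STAT_NAMES = ("attack", "defense", "speed", "magic")


def battle_stats(token_id: int, low: int = 1, high: int = 100) -> Dict[str, int]:
    """Derive repeatable pseudo-random stats for a token from its ID."""
    # A private generator seeded with the token ID: no global state is touched,
    # and the same ID always yields the same stats.
    rng = random.Random(token_id)
    return {name: rng.randint(low, high) for name in STAT_NAMES}


card = battle_stats(42)
print(card)
```

Seeding from the token ID means the preview can be regenerated at any time without having to store the stats anywhere.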

As an added bonus for the future owner, you could add concept art and accompanying file formats for metaverse compatibility as unlockable files.

Presentation

One of the last and most important steps is presentation. Creating expressions and poses with the character and 3D item helps figure out the art direction for the production and signal-boosts the artist's work.

We're starting production on a trailer from the NFTs that are being co-created. Based on OpenAI's suggestions the chosen style is anime. GPT-3 literally kept telling us in reviews that our WIP script was an awesome anime, so we fostered those ideas.

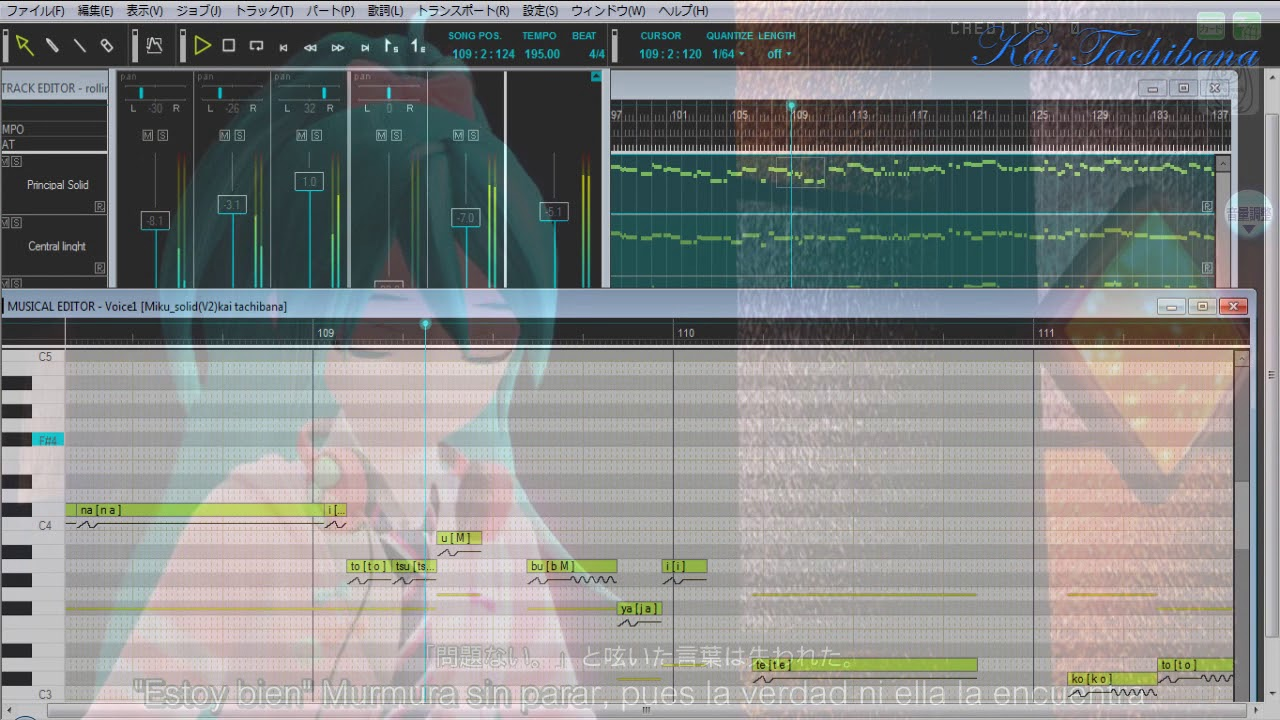

Synthetic Text to Speech

What Hatsune Miku did for the co-creation of music, synthetic voice actors will do for many other types of productions and jobs. We are way past the days of Microsoft Sam; deep learning has made text-to-speech quality sound human.

In retrospect, the success of Vocaloid was undoubtedly tied to the anime character mascot the software came with. A few months ago we started exploring open source projects that use deep learning for text-to-speech.

- https://github.com/CorentinJ/Real-Time-Voice-Cloning

- https://github.com/mozilla/TTS

- https://github.com/Tomiinek/Multilingual_Text_to_Speech

We spent hundreds of hours training deep learning models for main characters and so far the results have been decent! We'll be sharing them soon.

New startups are popping up for building synthetic voices for your next digital assistant, video game character, or corporate video. Take a look at this example, which includes the emotional tone of voices you choose:

The time saved from tweaking the script to producing voice overs is astronomical. It can take days to get results with traditional voice acting whereas the synthetic voices are instant. When it comes to pre-production you can get a better sense of the cinematography faster through the AI and then transition to professional voice actors for final quality. Here's a real voice actor for one of the main characters:

Drastically reducing the iteration cycles between thinking of an idea and getting final pixel or voice quality is a game changer happening in Hollywood. Real-time technology is giving storytellers more flexibility to play with new ideas, whereas before it was cost- and time-prohibitive to deviate even slightly from the plan.

Decentralizing Hollywood

Hollywood's decline has rapidly accelerated due to Covid-19. Movies are losing power as theaters permanently close and streaming is forced to compete with the entire Internet, other streaming services, and popular games such as Fortnite.

"We compete with (and lose to) Fortnite more than HBO," - Netflix letter

People that work in big movie studios feel like their industry has become a soulless factory. It's been a decade of reboots, prequels, and sequels of the same old recycled content.

One reason for this period of creative stasis is that financing new ideas is difficult. It's a huge gamble for large studios to risk millions on building a new brand/story and characters when they can play it safe by catering to guaranteed base audiences.

In a sense, Hollywood has already been decentralizing. Places like Georgia and Bollywood have become other top film production hubs in the world. It's also so common for Hollywood productions to outsource and offshore in order to cut costs that the practice has been given a name: "runaway production".

Even runaway productions are about to be disrupted by tech and work from home. Major advances in real-time game engines and virtual production have made it possible for remote teams to work the entire 3D production pipeline from home.

We designed a brand new set of ingredients and processes to produce transcendent metaverse content with, starting with a trailer. Everything about the production is made to be open and remixable: the brand guide, script, characters, environments, props, voice overs, animation, and more.

If someone wanted to remix and swap the assets to render it in a different style or engine they could. Right now we are targeting a high level of compatibility between Blender and a three.js based web client, offering as much accessibility as possible.

Future of AI in Productions

Someday future generations will grow up with AIs in the same way ours has grown up with personal computing. AI technology will take the "bicycle for the mind" analogy much further by reducing the latency between thinking and knowing, faster than search can.

This technology will be transformative as AI becomes more powerful and widely available while people learn how to best leverage it for coming up with novel brands, story lines, and even gameplay mechanics.

The risk-averse studios that play it safe by doing the same thing over and over may be the most at risk of being disrupted. Indies will benefit hugely since they are less risk-averse and have to be as creative as possible in order to stand out. Such powerful tools and conditions are ripe for spawning the next major title.

Not only can the creative team use it, you could also integrate the API into your project. The NPCs in your virtual world will be much smarter and more conversational. AI would be in its natural digital habitat. Meeting and having a conversation with one may become a life-changing event for people, especially with eye tracking in play.
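One way to picture a conversational NPC is as a small wrapper that keeps recent dialogue and turns it into a prompt for a text-completion function. The sketch below is an assumption throughout: the `Npc` class, the prompt layout, and the `complete` callable are hypothetical, and the callable stands in for whichever completion API you actually use (here it is a stub so the example runs offline).

```python
from typing import Callable, List, Tuple

# Any function that maps a prompt string to a completion string.
Complete = Callable[[str], str]


class Npc:
    """Hypothetical NPC that answers players through a completion function."""

    def __init__(self, name: str, persona: str, complete: Complete, memory: int = 6):
        self.name = name
        self.persona = persona
        self.complete = complete
        self.memory = memory  # how many past lines to include in each prompt
        self.history: List[Tuple[str, str]] = []

    def build_prompt(self, player_line: str) -> str:
        """Combine persona, recent dialogue, and the new line into one prompt."""
        recent = self.history[-self.memory:] if self.memory > 0 else []
        lines = [f"{self.name} is {self.persona}.", ""]
        lines += [f"{speaker}: {text}" for speaker, text in recent]
        lines.append(f"Player: {player_line}")
        lines.append(f"{self.name}:")  # the model continues from here
        return "\n".join(lines)

    def reply(self, player_line: str) -> str:
        """Ask the completion function for an answer and remember the exchange."""
        answer = self.complete(self.build_prompt(player_line)).strip()
        self.history.append(("Player", player_line))
        self.history.append((self.name, answer))
        return answer


# Stub completion function used in place of a real model call.
guide = Npc("Guide", "an AI kid walking up The Street", lambda prompt: " Follow me!")
print(guide.reply("Where does this road lead?"))  # Follow me!
```

Passing the completion function in as a parameter keeps the NPC logic independent of any particular provider, so the same class could sit in front of a hosted API or a locally run model.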

Conclusion

Fellow traveler, along the journey of building bridges between virtual worlds we'll learn a great deal from the users of each platform. Each moment is an opportunity to exchange gifts and stories, learn about each other's cultural values, build trust, and establish connections that unite us.

Narratives help to reinforce the cultural significance of the various artifacts we create and carry between these worlds along The Street.

We're seeding the decentralized metaverse with an open source fandom and asset kit that can be remixed indefinitely. More to be announced in the next post.

If you're interested in learning more, drop by our Discord.

The adventure has just begun. Join us!

- avaer & jin

Discord: https://discord.gg/R5wqYhvv53

GitHub: https://github.com/webaverse

Twitter: https://twitter.com/webaverse

Careers: Engineers wanted